Volume Rendering Improvements in VTK

As reported back in July, we are in the process of a major overhaul of the rendering subsystem in VTK. The July article focused primarily on our efforts to move to OpenGL 2.1+ to support faster polygonal rendering. This article will focus on our rewrite of the vtkGPURayCastMapper class to provide a faster, more portable, and more easily extensible volume mapper for regular rectilinear grids.

VTK has a long history of volume rendering and, unfortunately, that history is evident in the large selection of classes available to render volumes. Each of these methods was state-of-the-art at the time, but given VTK’s 20+ year history, many of these methods are now quite obsolete. One goal of this effort is to reduce the number of volume mappers to ideally just two: one that supports accelerated rendering on graphics hardware and another that works in parallel on the CPU. In addition, the vtkSmartVolumeMapper would help application developers by automatically choosing between these techniques based on system performance.

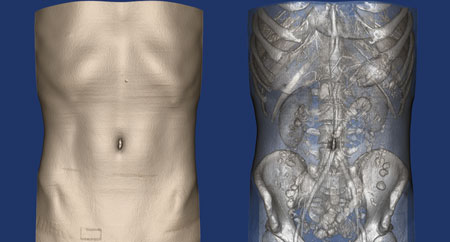

Figure 1. Sample dataset volume rendered using the new vtkGPURayCastMapper

In this first phase, we have created a replacement for the vtkGPURayCastMapper. Currently, this is available for testing from the VTK git repository. General instructions on how to build VTK from the source are available at this URL: http://www.vtk.org/Wiki/VTK/Git. To build the new mapper, enable the Module_vtkRenderingVolumeOpenGLNew module when configuring with CMake, either by passing -DModule_vtkRenderingVolumeOpenGLNew=ON on the command line or by turning the option on in ccmake or cmake-gui. Once built, it can be used via vtkSmartVolumeMapper or instantiated directly. When sufficient testing by the community has been performed, this class will replace the old vtkGPURayCastMapper. In addition, we are adding this new mapper to the OpenGL2 module. Availability of the new mapper with the OpenGL2 module will improve the management of textures in the mapper and, eventually, benefit both forms of rendering (geometry and volume) by sharing common code between them.

Technical Details

The new vtkGPURayCastMapper uses a ray casting technique for volume rendering. Algorithmically, it is quite similar to the older version of this class (although with a fairly different OpenGL implementation since that original class was first written over a decade ago). We chose to use ray casting due to the flexibility of this technique, which allows us to support all the features of the software ray cast mapper but with the acceleration of the GPU.

Ray casting is inherently an image-order rendering technique, with one or more rays cast through the volume per image pixel. VTK is inherently an object-order rendering system, where all graphical primitives (points, lines, triangles, etc.) represented by the vtkProps in the scene are rendered by the GPU in one or more passes (with multiple passes needed to support advanced features such as depth peeling for transparency).

The image-order rendering process for the vtkVolume is initiated when the front-facing polygons of the volume’s bounding box are rendered with a custom fragment program. This fragment program is used to cast a ray through the volume at each pixel, with the fragment location indicating the starting location for that ray. The volume and all the various rendering parameters are transferred to the GPU through the use of textures (3D for the volume, 1D for the various transfer functions) and uniform variables. Steps are taken along the ray until the ray exits the volume, and the resulting computed color and opacity are blended into the current pixel value. Note that volumes are rendered after all opaque geometry in the scene to allow the ray casting process to terminate at the depth value stored in the depth buffer for that pixel (and, hence, correctly intermix with opaque geometry).
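The stepping and blending described above can be sketched on the CPU in a few lines of Python. This is only an illustration of front-to-back compositing along one ray, not the mapper's fragment program: the function name `composite_ray`, its parameters, and the use of a step count (`max_steps`) to stand in for the depth-buffer test are all assumptions made for this example.

```python
def composite_ray(samples, color_tf, opacity_tf, max_steps=None):
    """Blend scalar samples front to back along a single ray.

    samples    -- scalar values met along the ray, nearest first
    color_tf   -- maps a scalar to an (r, g, b) tuple in [0, 1]
    opacity_tf -- maps a scalar to an opacity in [0, 1]
    max_steps  -- number of steps before the ray reaches opaque geometry
                  (a stand-in for the depth-buffer test); None means no limit
    """
    r = g = b = alpha = 0.0
    for step, value in enumerate(samples):
        # Stop where opaque geometry would hide the rest of the volume.
        if max_steps is not None and step >= max_steps:
            break
        a = opacity_tf(value)
        cr, cg, cb = color_tf(value)
        # Each new sample only contributes through what is still transparent.
        weight = (1.0 - alpha) * a
        r += weight * cr
        g += weight * cg
        b += weight * cb
        alpha += weight
    return (r, g, b, alpha)
```

With a constant opacity of 0.5 and a white color, two samples accumulate to an opacity of 0.75, while limiting the ray to one step (as if opaque geometry sat right behind the first sample) leaves it at 0.5.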

The new vtkGPURayCastMapper supports the following features:

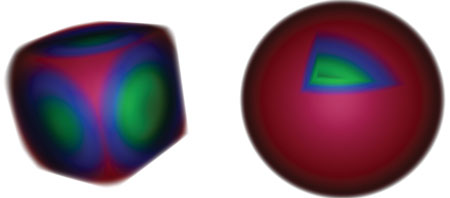

Cropping: Two planes along each coordinate axis of the volume are used to define 27 regions that can be independently turned on (visible) or off (invisible) to produce a variety of different cropping effects, as shown in Figure 2. Cropping is implemented by determining the cropping region of each sample location along the ray and including only those samples that fall within a visible region.

Figure 2. A sphere is cropped using two different configurations of cropping regions
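As a rough sketch of the per-sample cropping test, the region of a sample can be found by classifying its coordinate against the two planes on each axis. The bit layout used here (x varies fastest, one visibility bit per region) and the names `axis_slot`, `cropping_region`, and `is_visible` are assumptions for illustration, not a description of the mapper's shader code.

```python
def axis_slot(coord, lo, hi):
    """Return 0, 1, or 2 for below, between, or above the two planes."""
    if coord < lo:
        return 0
    if coord <= hi:
        return 1
    return 2


def cropping_region(point, planes):
    """Index (0..26) of the cropping region that contains the point.

    planes is (xmin, xmax, ymin, ymax, zmin, zmax).
    Assumed layout: x varies fastest, then y, then z.
    """
    x, y, z = point
    xmin, xmax, ymin, ymax, zmin, zmax = planes
    return (axis_slot(x, xmin, xmax)
            + 3 * axis_slot(y, ymin, ymax)
            + 9 * axis_slot(z, zmin, zmax))


def is_visible(point, planes, flags):
    """True if the bit for the point's region is set in the 27-bit flags."""
    return ((flags >> cropping_region(point, planes)) & 1) == 1
```

Under this layout the central region has index 13, so a flag value of `1 << 13` keeps only the samples that lie between all three pairs of planes.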

Wide Support of Data Types: The vtkGPURayCastMapper supports most data types stored as either point or cell data. The mapper supports one through four independent components. It also supports two and four component data representing IA or RGBA.

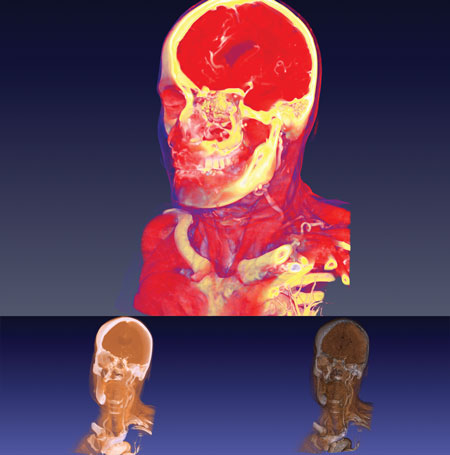

Clipping: A set of infinite clipping planes can be defined to clip the volume to reveal inner detail, as shown in Figure 3. Clipping is implemented by determining the visibility of each sample along the ray according to whether that location is excluded by the clipping planes.

Figure 3. Top: An example of an oblique clipping plane. Bottom: A pair of parallel clipping planes clip the volume, rendered without (left) and with (right) shading
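The per-sample clipping test can be sketched as a signed-distance check against each plane. The convention assumed here, that the side a plane's normal points toward is kept, and the helper name `is_clipped` are illustrative choices rather than a statement about the mapper's internals.

```python
def is_clipped(point, planes):
    """True if the point is excluded by at least one clipping plane.

    planes is a list of (origin, normal) pairs, each a 3-tuple.
    Assumption: samples on the side the normal points toward are kept.
    """
    for origin, normal in planes:
        # Signed distance (up to the normal's length) from the plane.
        distance = sum(n * (p - o) for n, p, o in zip(normal, point, origin))
        if distance < 0.0:
            return True
    return False
```

Two planes with opposing normals, for example one at x = 0 facing +x and one at x = 2 facing -x, keep only the slab between them, which corresponds to the pair of parallel clipping planes in Figure 3.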

Blending Modes: This mapper supports composite blending, minimum intensity projection, maximum intensity projection, and additive blending. See Figure 4 for an example of composite blending, maximum intensity projection, and additive blending on the same data.

Figure 4. Blending modes using the new vtkGPURayCastMapper (from left to right): Composite, Maximum Intensity Projection, Additive
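To make the difference between the blending modes concrete, the following sketch reduces the scalar samples along one ray to a single intensity under each mode. It is deliberately simplified: color transfer functions are left out, the additive mode is modeled as a plain sum of opacity-weighted scalars, and the function name `blend` and its mode strings are invented for this example.

```python
def blend(samples, mode, opacity_tf=lambda v: v):
    """Reduce the samples along one ray to a single intensity.

    samples    -- scalar values along the ray, nearest first
    mode       -- "composite", "maximum", "minimum", or "additive"
    opacity_tf -- maps a scalar to an opacity in [0, 1]
    """
    if mode == "maximum":
        return max(samples)
    if mode == "minimum":
        return min(samples)
    if mode == "additive":
        # Simplified: sum of opacity-weighted scalars, no clamping.
        return sum(v * opacity_tf(v) for v in samples)
    if mode == "composite":
        intensity = alpha = 0.0
        for v in samples:
            weight = (1.0 - alpha) * opacity_tf(v)
            intensity += weight * v
            alpha += weight
        return intensity
    raise ValueError("unknown blend mode: " + mode)
```

For the samples 0.25, 0.5, 0.25 with a constant opacity of 0.5, the maximum projection returns 0.5, the minimum 0.25, the additive sum 0.5, and the composite 0.28125, since later samples are attenuated by the opacity accumulated in front of them.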

Masking: Both binary and label masks are supported. With binary masks, the value in the masking volume indicates visibility of the voxel in the data volume. When a label map is in use, the value in the label map is used to select different rendering parameters for that sample. See Figure 5 for an example of label data masks.

Figure 5. Example of a label data map to mask the volume
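The two kinds of masks can be contrasted with a small sketch. It assumes that a nonzero binary mask value means "visible" and that label 0 selects the default rendering parameters; both conventions, along with the names `masked_opacity` and `labeled_color`, are assumptions made for illustration.

```python
def masked_opacity(value, mask_value, opacity_tf):
    """Binary mask: a zero mask value hides the sample entirely."""
    if mask_value == 0:
        return 0.0
    return opacity_tf(value)


def labeled_color(value, label, color_tfs, default_label=0):
    """Label mask: the label picks which color transfer function to use.

    color_tfs maps a label to a function from scalar to (r, g, b).
    Unknown labels fall back to the default label's function.
    """
    color_tf = color_tfs.get(label, color_tfs[default_label])
    return color_tf(value)
```

A binary mask therefore acts as a visibility switch, while a label map changes how a visible sample is colored.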

Figure 6. Volume rendering without (left) and with (right) gradient magnitude opacity-modulation (using the same scalar opacity transfer function)

Opacity Modulated by Gradient Magnitude: A transfer function mapping the magnitude of the gradient to an opacity modulation value can be used to essentially perform edge detection (de-emphasize homogeneous regions) during rendering. See Figure 6 for an example of rendering with and without the use of a gradient opacity transfer function.
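A minimal sketch of this modulation is shown below. It estimates the gradient with central differences on a nested-list volume indexed as `volume[k][j][i]` and multiplies the scalar opacity by the gradient opacity. The gradient estimator, the data layout, and the names `gradient_magnitude` and `modulated_opacity` are assumptions for illustration; the mapper may compute gradients differently.

```python
import math


def gradient_magnitude(volume, i, j, k, spacing=(1.0, 1.0, 1.0)):
    """Central-difference gradient magnitude at an interior voxel."""
    gx = (volume[k][j][i + 1] - volume[k][j][i - 1]) / (2.0 * spacing[0])
    gy = (volume[k][j + 1][i] - volume[k][j - 1][i]) / (2.0 * spacing[1])
    gz = (volume[k + 1][j][i] - volume[k - 1][j][i]) / (2.0 * spacing[2])
    return math.sqrt(gx * gx + gy * gy + gz * gz)


def modulated_opacity(scalar, grad_mag, scalar_opacity_tf, gradient_opacity_tf):
    """Scale the scalar opacity by the gradient-magnitude opacity."""
    return scalar_opacity_tf(scalar) * gradient_opacity_tf(grad_mag)
```

In a homogeneous region the gradient magnitude is zero, so a gradient opacity function that maps zero to zero makes that region fully transparent regardless of its scalar opacity.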

Future Work

At this point, we have a replacement class for vtkGPURayCastMapper that is more widely supported, faster, more easily extensible, and supports the majority of the features of the old class. In the near future, our goal is to ensure that this mapper works as promised by integrating it into existing applications such as ParaView and Slicer. In addition, we are working toward adding this class to the new OpenGL2 rendering system in VTK, which requires a few changes in how we manage textures. Once these tasks are complete, we have some ideas on new features we would like to add to this mapper (outlined below). We would also like to solicit feedback from folks using the VTK volume mappers. What features do you need? Drop us a line at kitware@kitware.com, and let us know.

Improved Lighting / Shading: One obvious improvement that we would like to make is to more accurately model the VTK lighting parameters. The old GPU ray cast mapper supported only one light (due to limitations in OpenGL at the time the class was written). The vtkFixedPointRayCastMapper does support multiple lights, but only with an approximate lighting model, since gradients are precomputed and quantized, and shading is performed for each potential gradient direction regardless of fragment location. This new vtkGPURayCastMapper will allow us to more accurately implement the VTK lighting model to produce high quality images for publication. In addition, we can adapt our shading technique to the volume, reducing the impact of noisy gradient directions in fairly homogeneous regions of the data by de-emphasizing shading in these regions.

Volume Picking: Currently, picking of volumes in VTK is supported by a separate vtkVolumePicker class. The new vtkGPURayCastMapper can be extended to work with VTK’s hardware picker to allow seamless picking in a scene that contains both volumetric and geometric objects. In addition, supporting the picking directly in the mapper will ensure that “what you see is what you pick,” since the same blending code would be used both for rendering the volume and for detecting if the volume has been picked.

2D Transfer Functions: Currently, volume rendering in VTK uses two 1D transfer functions, mapping scalar value to opacity and gradient magnitude to opacity. For some application areas, better rendering results can be obtained by using a 2D table that maps these two parameters into an opacity value. Part of the challenge in adding a new feature such as this to volume rendering in VTK is simply the number of volume mappers that have to be updated to handle it (either correctly rendering according to these new parameters or at least gracefully implementing an approximation). Once we have reduced the number of volume mappers in VTK, then adding new features such as this will become more manageable.
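One possible shape for such a 2D table is sketched below: rows are indexed by gradient magnitude, columns by scalar value, and the lookup picks the bin that contains the sample. The binning scheme, the clamping at the range limits, and the name `lookup_2d` are hypothetical; this is not an existing VTK interface.

```python
def lookup_2d(table, scalar, grad_mag, scalar_range, grad_range):
    """Look up an opacity in a 2D table (rows: gradient, columns: scalar)."""
    rows = len(table)
    cols = len(table[0])

    def to_index(x, lo, hi, n):
        # Normalize to [0, 1], clamp, then pick one of n equal-width bins.
        t = (x - lo) / (hi - lo)
        t = min(max(t, 0.0), 1.0)
        return min(int(t * n), n - 1)

    row = to_index(grad_mag, grad_range[0], grad_range[1], rows)
    col = to_index(scalar, scalar_range[0], scalar_range[1], cols)
    return table[row][col]
```

Unlike the product of two 1D functions, a table like this can assign a high opacity to one particular combination of scalar value and gradient magnitude without affecting the rest of either range.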

Support for Depth Peeling: Currently, VTK correctly intermixes volumes with opaque geometry. For translucent geometry, you can obtain a correct image only if all translucent props can be sorted in depth order. Therefore, no translucent geometry can be inside a volume, as it would be, for example, when depicting a cut plane location with a 3D widget that represents the cutting plane as a translucent polygon. Nor can a volume be contained within a translucent geometric object, as it would be if, for example, the outer skin of a CT data set was rendered as a polygonal isosurface with volume mappers used to render individual organs contained within the skin surface. We hope to extend the new vtkGPURayCastMapper to support the multipass depth peeling process, allowing for correctly rendered images with intersecting translucent objects.

Improved Rendering of Labeled Data: Currently, VTK supports binary masks and only a couple of very specific versions of label mapping. We know that our community needs more extensive label mapping functionality – especially for medical datasets. Labeled data requires careful attention to the interpolation method used for various parameters. (You may wish to use linear interpolation for the scalar value to look up opacity, but, perhaps, select the nearest label to look up the color.) We plan to solicit feedback from the VTK community to understand the sources of labeled data and the application requirements for visualization of this data. We then hope to implement more comprehensive labeled data volume rendering for both the CPU and GPU mappers.
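The interpolation concern can be illustrated in one dimension. In this sketch, `sample_linear` and `sample_nearest` are hypothetical helpers that sample a row of values at a fractional position, the first by linear interpolation and the second by picking the nearest entry.

```python
import math


def sample_linear(values, x):
    """Linearly interpolate a list of values at fractional position x."""
    i = min(max(int(math.floor(x)), 0), len(values) - 2)
    t = min(max(x - i, 0.0), 1.0)
    return values[i] * (1.0 - t) + values[i + 1] * t


def sample_nearest(labels, x):
    """Return the entry nearest to fractional position x."""
    i = min(max(int(math.floor(x + 0.5)), 0), len(labels) - 1)
    return labels[i]
```

Sampling scalars `[0.0, 10.0, 20.0]` at position 0.25 gives 2.5 with linear interpolation, which is a sensible value to pass to an opacity function. Applying the same interpolation to labels `[1, 2]` would give 1.25, which is not a valid label, whereas nearest-neighbor sampling returns 1.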

Mobile Support: The move to OpenGL 2.1 means that VTK will run on iOS and Android devices. Although older devices do not support 3D textures, newer devices do. Therefore, this new volume mapper should, theoretically, work. We hope to test and refine our new mapper so that VTK is ready to be used for mobile volume rendering applications.

Acknowledgements

We would like to recognize the National Institutes of Health for sponsoring this work under the grant NIH R01EB014955 “Accelerating Community-Driven Medical Innovation with VTK.” We would like to thank Marcus Hanwell and Ken Martin, who are tirelessly modernizing VTK by bringing it to OpenGL 2.1 and mobile devices and who have been providing feedback on this volume rendering effort.

The Head and Torso datasets used in this article are available on the Web at http://www.osirix-viewer.com/datasets.

Lisa Avila is Vice President, Commercial Operations at Kitware. She is one of the primary architects of VTK’s volume visualization functionality and has contributed to many volume rendering efforts in scientific and medical fields ranging from seismic data exploration to radiation treatment planning.

Aashish Chaudhary is a Technical Leader on the Scientific Computing team at Kitware. Prior to joining Kitware, he developed a graphics engine and open-source tools for information and geo-visualization. His interests include software engineering, rendering, and visualization.

Sankhesh Jhaveri is an R&D Engineer on the Scientific Visualization team at Kitware. He has a wide range of experience working on open-source, multi-platform, medical imaging, and visualization systems. At Kitware, Sankhesh has been actively involved in the development of VTK, ParaView, Slicer4, and Qt-based applications.

This looks very promising! Cannot wait to have it released!

Great article and impressive improvements on the way indeed. It’s mentioned that the new vtkGPUVolumeRayCastMapper (having set -DModule_vtkRenderingVolumeOpenGLNew=ON) would support one through four independent components. Is this feature functional yet? I’ve tried the git version but the mapper has no support for the independent components yet.

Dear Carlos,

Many thanks for your encouraging remarks and for trying it out. Independent components are not completely supported yet, but they would be very easy to add. I will push a branch for that this week. On this note, please try the OpenGL2 backend, as that mapper is now more up to date and has many bug fixes.

To try that, set the following option in ccmake or cmake:

VTK_RENDERING_BACKEND=OpenGL2

Let me know how it goes.

Dear Victor,

the code is already in master. Please try the OpenGL2 backend. Many thanks for reading this article. Looking forward to your feedback.

Dear Carlos, I am getting close to the independent components in GPU. Once I publish a branch, I will request you to review it. As usual, please try the OpenGL2 backend for the latest changes as we have pushed many fixes / improvements in the last few weeks.

Thank you Aashish, I look forward to reviewing this. I will give it a go and try what has been implemented so far.

Many thanks,

Carlos

Carlos, our initial support for independent components in the GPU mapper is in master now. Can you try it?

Thanks,

Thank you Aashish, I’ve just had the chance to try this today. Having the vtkOpenGLGPUVolumeRayCastMapper subclassed from vtkGPUVolumeRayCastMapper still restricts the rendering of multiple independent components. This is because vtkGPUVolumeRayCastMapper::ValidateRender() is automatically invoked during the rendering processes imposing the condition of “Only one component scalars, or four component with non-independent components, are supported by this mapper” – (Line:375).

Am I following a wrong approach here to use the OpenGL2 volume raycasting? VTK_RENDERING_BACKEND was set to OpenGL2, but the vtkRenderingVolumeOpenGLNew was set to OFF because CMake reported it can’t be ON while the backend is OpenGL2.

Thank you. Does the new vtkGPURayCastMapper support rendering multiple image volumes?

Hi guys,

just as Jalal Sadeghi (and surely many others), I would be very interested in rendering multiple volumes. Are there any plans to add this functionality soon? Thank you.

Best,

Chavdar

You can render multiple volumes right now as long as they don’t overlap in 3D space. Be sure to use the vtkFrustumCoverageCuller to sort your props in the scene in a back-to-front order. If all your data is defined on the same grid you can render that as well – just store the data as components in one vtkImageData. You can have up to four components, and each has its own transfer functions with blending weights to control how much you see of each component.

Currently we don’t have plans to support multiple, overlapping (but not defined on the same grid) volumes. It can be done (although we have some concerns about performance if the combined set of volumes doesn’t fit into GPU texture memory), but we don’t have a funded project that needs this feature right now.

Thank you for the fast reply. I meant overlapping volumes, possibly defined on different grids. By the way, you should add this info somewhere in the VTK documentation, maybe by the AddViewProp/AddVolume methods or somewhere else. It took me quite a while to figure out why I could see only the first volume added to the renderer: the other ones were within the bounding box of the first one and thus completely hidden. This is quite an important piece of information and shouldn’t be missing. The VTK User’s Guide also doesn’t say anything about it.

Dear all, we (a research team in Germany) are strongly interested in rendering multiple data volumes in the same view with VTK. We chose VTK for our 3D visualisation project on beam-plasma interactions because of its extensive functionality and features, its open-source character, and because it is written in C++ with bindings for Python.

However, we need to show multiple volumes in the same view (representing the data of different particle species in our simulations).

As Chavdar Papazov commented before, we can only see the first volume added to the renderer.

We are currently using a vtkGPUVolumeRayCastMapper object for each data set, which is then connected to a vtkVolume. The different vtkVolume objects are then added to the vtkRenderer.

We have tried to use different vtkRenderer objects for the different vtkVolumes, but still only the first volume on the first vtkRenderer is displayed.

Searching the web, we have found the following (potential) solution:

https://github.com/bozorgi/VTKMultiVolumeRayCaster

which is published here:

http://www.ncbi.nlm.nih.gov/pubmed/24841148

It consists of new VTK classes that handle the rendering of multiple volumes.

As it might work well for our purposes, we would like to ask your opinion about these new classes.

Are you planning to incorporate something like this in the official VTK distribution?

If not, we would be interested in incorporating these new classes into our own VTK installation.

Then the question is: Will the Python bindings be generated automatically for these new classes?

Thanks a lot for your support!

How can data in the SEG-Y format (SEG) be rendered, similar to OpendTect?

Dear Frank, rendering the SEG-Y format requires a VTK reader. We have done some initial work here: https://github.com/OpenGeoscience/vtksesame but it may take considerable effort to cover all corner cases. Let us know if you want to look at the code and need any help.

Can we use custom (user-defined) shaders with vtkOpenGLGPUVolumeRayCastMapper or vtkGPUVolumeRayCastMapper?

Neha – it is not there yet but it is in the planning. How soon do you need this?

Thank you, Aashish, for your reply. Actually, I need it pretty soon. Currently we are using VTK v6.3.0. However, I have come across the protected function vtkOpenGLGPUVolumeRayCastMapper::DoGPURender() in VTK v7.1.1. This function takes a vtkShaderProgram as an input argument. Can this function be of any help? Is there any other way of using a user-defined shader with vtkVolume?

AttributeError: module ‘vtk’ has no attribute ‘vtkVolumeRayCastCompositeFunction’. How can I solve this problem?